QCT and Supermicro among first to use server specification enabling over 100 system configurations to accelerate AI, HPC and Omniverse workloads

COMPUTEX—To meet the diverse accelerated computing needs of data centers around the world, NVIDIA today introduced the NVIDIA MGX™ server specification, which provides system builders with a modular reference architecture to quickly and cost-effectively create more than 100 server variants to meet a wide range of AI, high-performance computing, and Omniverse applications.

ASRock Rack, ASUS, GIGABYTE, Pegatron, QCT and Supermicro will adopt MGX, which can cut development costs by up to three-quarters and reduce development time by two-thirds, to just six months.

“Enterprises are looking for more accelerated computing options when designing data centers that meet their specific business and application needs,” said Kaustubh Sanghani, vice president of GPU products at NVIDIA. “We built MGX to help organizations jump-start enterprise AI, saving them significant amounts of time and money.”

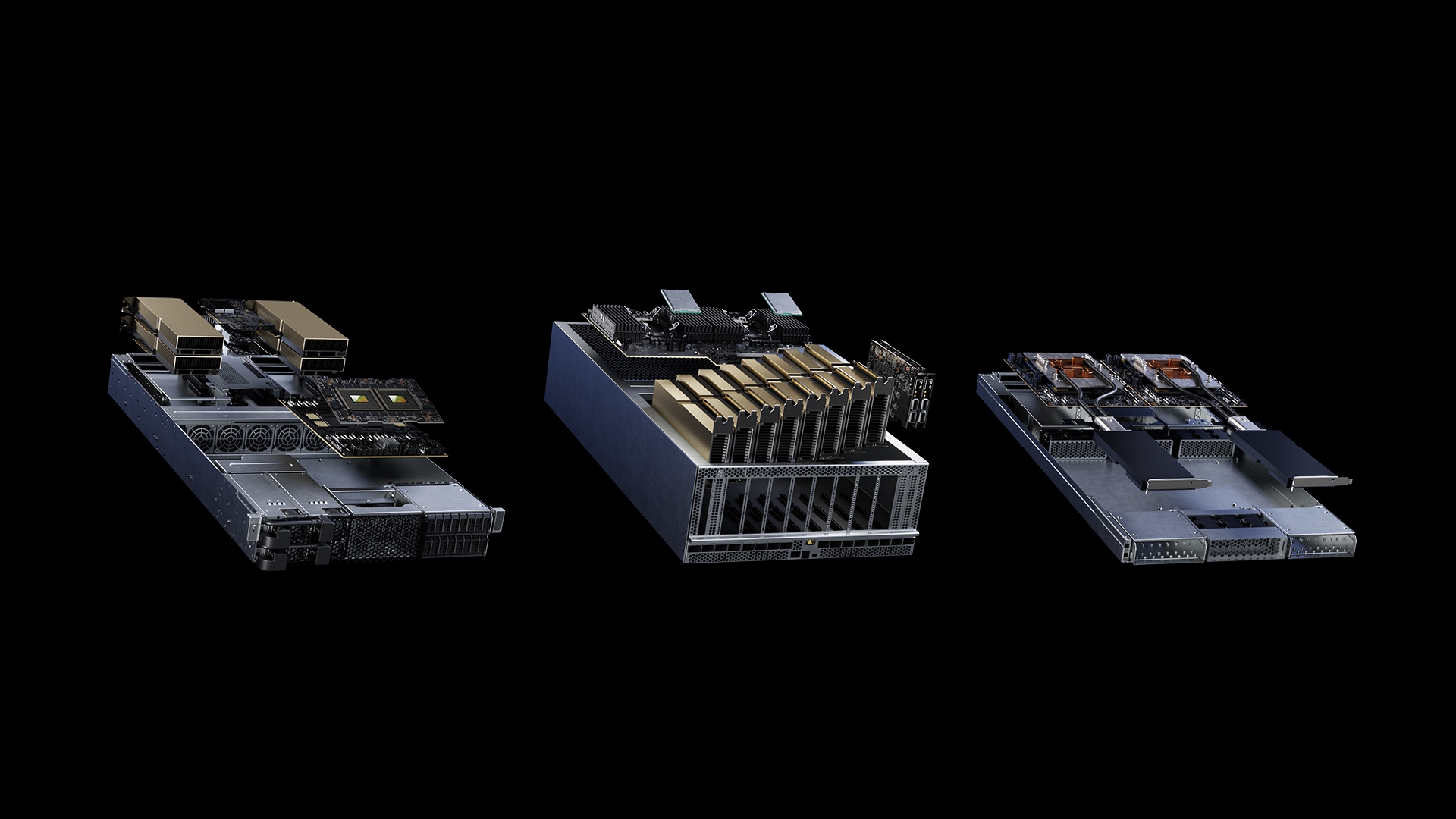

With MGX, manufacturers start with a base system architecture for their server chassis that is optimized for accelerated computing, then select GPUs, DPUs, and CPUs. Design variations can address unique workloads, such as HPC, data science, large language models, edge computing, graphics and video, enterprise AI, and design and simulation. Multiple tasks, such as AI training and 5G, can be handled on a single machine, and the modular design is intended to make upgrades to future hardware generations frictionless. MGX can also be easily integrated into cloud and enterprise data centers.

Collaboration with industry leaders

QCT and Supermicro will be first to ship, with MGX designs appearing in August. Supermicro’s ARS-221GL-NR system, announced today, will include the NVIDIA Grace™ CPU Superchip, while QCT’s S74G-2U system, also announced today, will utilize the NVIDIA GH200 Grace Hopper Superchip.

Additionally, SoftBank Corp. plans to deploy multiple hyperscale data centers across Japan and use MGX to dynamically allocate GPU resources between generative AI and 5G applications.

“As generative AI permeates business and consumer lifestyles, building the right infrastructure at the right cost is one of the biggest challenges facing network operators,” said Junichi Miyakawa, president and CEO of SoftBank Corp. “We expect that NVIDIA MGX can address these challenges and enable multipurpose AI, 5G, and more depending on real-time workload requirements.”

Different designs for different needs

Data centers increasingly need to deliver more compute capability while also lowering carbon emissions to combat climate change, all while keeping costs down.

Accelerated compute servers from NVIDIA have long provided exceptional computing performance and energy efficiency. Now, MGX’s modular design offers system manufacturers the ability to more effectively meet each customer’s specific budget, power delivery, thermal design, and mechanical requirements.

Multiple form factors provide maximum flexibility

MGX works in many form factors and is compatible with current and future generations of NVIDIA hardware, including:

- Chassis: 1U, 2U, 4U (air or liquid cooled)

- GPU: Full portfolio of NVIDIA GPUs, including the latest H100, L40, L4

- CPU: NVIDIA Grace CPU Superchip, GH200 Grace Hopper Superchip, x86 CPUs

- Network: NVIDIA BlueField®-3 DPUs, ConnectX®-7 network adapters
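To illustrate how a modular specification multiplies into many server variants, the option lists above can be combined in a short Python sketch. This is a toy model only: treating air and liquid cooling as a separate axis, the dictionary field names, and the absence of any compatibility rules are all assumptions of the sketch, not part of the MGX specification. The number it prints is an artifact of this naive cross product and is not how NVIDIA arrives at its figure of more than 100 configurations.

```python
from itertools import product

# Option lists copied from the bullets above. Splitting cooling into its
# own axis is an assumption of this sketch, not something MGX defines.
CHASSIS = ["1U", "2U", "4U"]
COOLING = ["air", "liquid"]
GPUS = ["H100", "L40", "L4"]
CPUS = ["Grace CPU Superchip", "GH200 Grace Hopper Superchip", "x86"]
NETWORK = ["BlueField-3 DPU", "ConnectX-7"]


def enumerate_variants():
    """Return every naive combination of the listed options.

    No compatibility rules are applied, so some combinations may not
    correspond to systems that can actually be built.
    """
    return [
        {"chassis": ch, "cooling": co, "gpu": g, "cpu": c, "network": n}
        for ch, co, g, c, n in product(CHASSIS, COOLING, GPUS, CPUS, NETWORK)
    ]


variants = enumerate_variants()
print(len(variants))  # 3 * 2 * 3 * 3 * 2 = 108
```

A real system builder would filter this list against thermal, power, and mechanical constraints before treating any entry as a valid design.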

MGX differs from NVIDIA HGX™ in that it offers flexible, cross-generation compatibility with NVIDIA products, so system builders can reuse existing designs and easily adopt next-generation products without costly redesigns. HGX, by contrast, is based on a multi-GPU baseboard connected by NVLink® and tailored for scalability to create the ultimate in AI and HPC systems.

Software to further drive acceleration

Beyond hardware, MGX is supported by NVIDIA’s full software stack, enabling developers and businesses to build and accelerate AI, HPC, and other applications. This includes NVIDIA AI Enterprise, the software tier of the NVIDIA AI platform, which features over 100 fully supported frameworks, pre-trained models, and developer tools to accelerate AI and data science for enterprise AI development and deployment.

MGX is compatible with Open Compute Project and Electronic Industries Alliance server racks, for rapid integration into enterprise and cloud data centers.

Watch NVIDIA founder and CEO Jensen Huang discuss the specifics of the MGX server in his talk at COMPUTEX.
